About 18 months ago, we started getting our first “AI agent” pitches. It was clear this had huge potential. But now we’re seeing the full map with even more clarity.

A quick recap: we see AI Agents turning labor into software, a market size in the trillions. Since our first essay on this, we’ve worked with amazing companies in this space and want to do more of it.

But if you’re following this space as closely as we are, you probably have noticed something. Progress and adoption are out of sync.

On one hand, there is rapid technological progress. Just recently, “tool use” (Operator, computer use, Gemini 2.0) and improved “reasoning” (o3, R1, 3.7 Sonnet) emerged as new AI capabilities. Both are fundamental prerequisites for AI “agents” and get us closer to the future. A world where AI Agents can act autonomously and execute complex tasks, at a far lower price than we thought possible even a few months ago, is very real. Novel capabilities, paired with the continuous improvements in AI performance and cost (see DeepSeek), are setting the foundation for exploding future demand. That’s the good news.

The less-good news is that there’s still a disconnect between progress and adoption: a gap between the intent to implement AI at work and actually doing it. For example, a recent McKinsey survey of 100 organizations with more than $50M in annual revenue found that 63 percent of leaders thought implementing AI was a high priority. But 91 percent of those respondents didn’t feel prepared to do so.

It’s very early days. And that’s where you come in. Your primary job is to be a bridge between deep technical progress and mass adoption. You have to figure out how to make people actually see this change, want it, and have it actually work for them.

So how do we get there? It turns out we may be missing a few layers of the AI agent stack.

Actually, we are missing three necessary layers right now, plus a bonus:

- The Accountability Layer: the foundation of transparency, verifiable work and reasoning.

- The Context Layer: a system to unlock company knowledge, culture, and goals.

- The Coordination Layer: enabling agents to collaborate seamlessly with shared knowledge systems.

- Empowering AI Agents: equipping them with the tools and software to maximize their autonomy in the rising B2A (Business to Agent) sphere.

We are interested in companies building across each one of these layers. Or connecting them all, like NFX portfolio company Maisa (more on that below).

As we solve these challenges and build this infrastructure, we’ll be able to tackle new and more complex (and valuable) tasks with AI. And once that’s the norm, many more markets we can barely even conceive of now will emerge.

But first, we need these layers and here’s why:

Unlocking Autonomy: From RPA to APA (Agentic Process Automation)

To understand how we are going to unlock full autonomy, we first have to understand a major shift in the way people look at “process automation” –– for lack of a more interesting word.

We are moving from Robotic Process Automation (RPA) to Agentic Process Automation (APA).

RPA is a multi-billion dollar industry with massive companies like UiPath, Blue Prism, and WorkFusion, among others. It’s proof that people are more than willing to adopt automation for high-value tasks. To understand how we can bring on the agent economy, it’s useful to use RPA as a starting point. Once you see its benefits and limitations, it’s clear how Agents are the natural, and massive, next step.

The benefits: RPA excels at rule-based, structured tasks spanning multiple business systems (100-200 steps). It is effective at capturing company knowledge within rules (e.g., VAT number processing), making automations reliable as long as the underlying systems are static.

And, RPA has strong product market fit already.

The limitations: The universe of possible “RPA-able” tasks was always going to be limited because you had to be able to map out, in detail, exactly what process the RPA should take (move a mouse here, design this spreadsheet that way, etc.). And, importantly, you had to expect it to remain the same. Or it breaks.

RPA can only go so far because you can’t process-map everything you do and expect perfectly exact repeatability (some companies can’t even process-map at all without hiring outside consultants to “mine” their own processes). In fact, you may not even want that dynamic all the time: part of doing great work is reacting to an environment, taking in changes, and tweaking things as you go.

In summary, RPA works extremely well for certain tasks. But RPA is completely inflexible. Reliable, but inflexible.
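To make that brittleness concrete, here is a minimal sketch of an RPA-style rule. Everything in it is hypothetical: the function name, the three-column layout, and the assumption that a valid VAT number starts with "ES" are ours, chosen only to illustrate a rule that silently depends on a static system.

```python
# Hypothetical illustration of a rule that assumes a fixed export layout.
def extract_vat_number(row: list[str]) -> str:
    """Rule-based step: the VAT number is always in the third column."""
    vat = row[2]
    # Assumed validation rule for this example: VAT numbers start with "ES".
    if not vat.startswith("ES"):
        raise ValueError(f"unexpected value in VAT column: {vat!r}")
    return vat


original_layout = ["ACME S.L.", "Madrid", "ESB12345678"]
changed_layout = ["ACME S.L.", "ESB12345678", "Madrid"]  # columns reordered upstream

# Works as long as the underlying system stays the same.
print(extract_vat_number(original_layout))

# The same rule fails as soon as the layout shifts.
try:
    extract_vat_number(changed_layout)
except ValueError as err:
    print("automation broke:", err)
```

The rule is perfectly reliable on the layout it was mapped against, and useless the moment a column moves. That is the trade-off in miniature.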

Enter LLMs. The rise of LLMs represents a major shift. LLMs provide unlimited, cheap, adaptive intelligence. They allow us to define and collate the context needed to solve more complex problems. And as they began to learn reasoning, they hugely expanded the surface area of automatable tasks.

That said, LLMs aren’t perfect either. LLMs struggle with repetitive steps but work well for unstructured parts of business processes –– this can be a blessing or a curse, depending on how creative vs deterministic you want your outcome to be…

But either way, they’re a black box. You can’t be 100% sure of what the system is going to do, nor why it will do it. Even reasoning traces or model-provided rationales can be completely hallucinated.

Organizations need certainty, or it’s hard to implement any kind of system. Even if you want an LLM to be more creative, that’s useless to you if you don’t understand why and how it’s arriving at certain conclusions.

So where does this leave us?

RPAs have strong PMF. It’s easy to see how your system is working. But tasks are limited and they have no true flexibility or understanding of context. They also require a lot of “pre-work.”

LLMs are more capable with the unstructured information that’s hard to express in rules, but they’re a black box.

The answer for agents and APA: we need a bit of both.

We need the reliability of the RPA system with the flexibility (and affordability) of the LLM. This takes shape as an auditability and context layer that we can implement into the AI agent stack. As a builder in this space you need to be working on this if you want to have a chance at widespread adoption.

The Accountability Layer: An Unlock for Adoption, Learning and Supervision

Think back to your math classes in elementary school. When you were asked to solve a problem, you didn’t get full credit for just writing the answer. You were asked to “show your work.” The teacher does this to verify that you actually understand the process that led to that correct answer.

This is a step that many AI systems, even those that seem to show us logical trains of thought, are missing. We have no idea why AI actually generated those exact actions or chains of thought. They’re just generated.

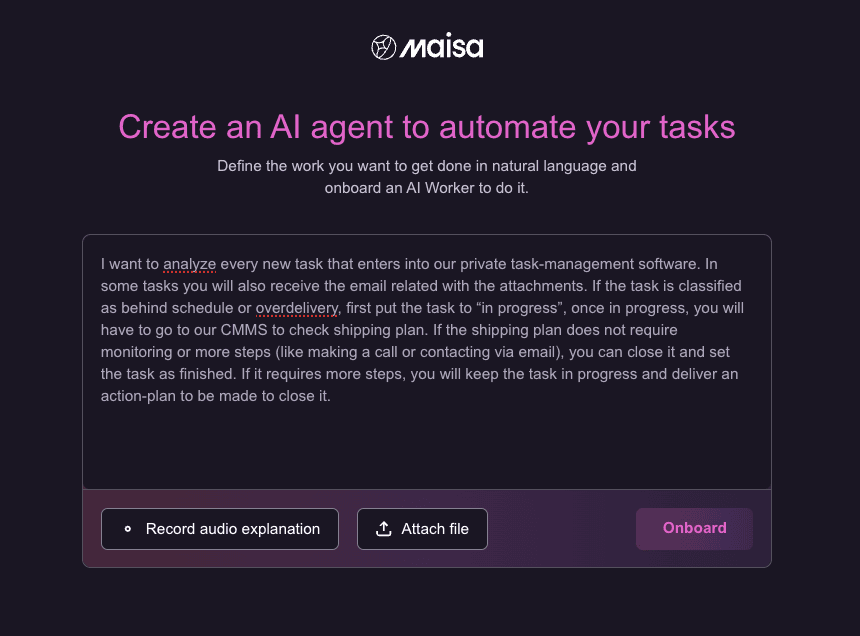

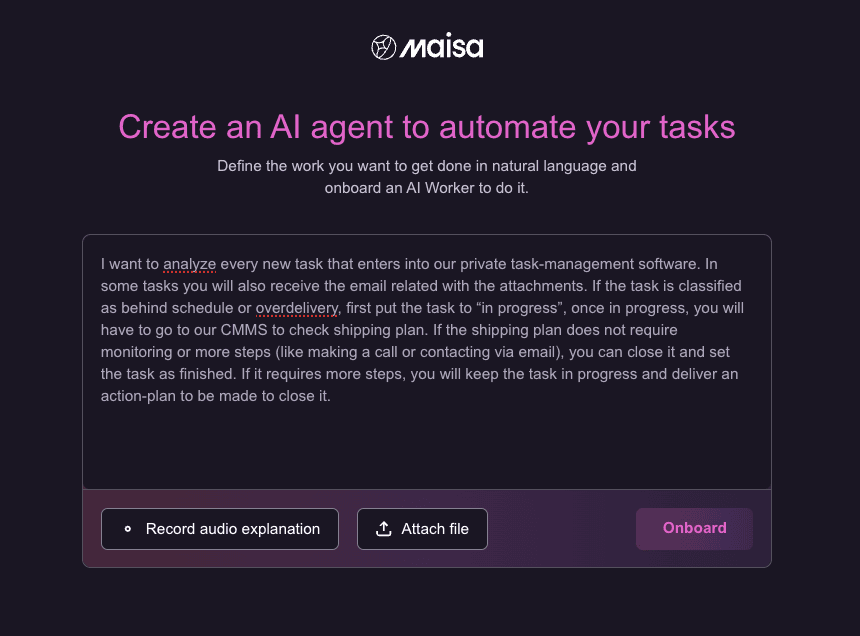

We first became aware of how big of a deal this was when we met Maisa. This metaphor was developed by David Villalón and Manuel Romero, the company’s co-founders, and it perfectly encapsulates the problem with so many AI agent ecosystems right now.

Enterprises feel like they’re supposed to blindly trust the AI’s thought process. Early in product development, Maisa met with a client that said they needed to prove to auditors exactly what was being done by their AI systems. They needed evidence of each step taken and, critically, why those steps were taken at all.

Conversations like that gave rise to Maisa’s concept of “Chain of Work” –– a factor we now believe will be key to AI agent implementation in the workforce.

At the heart of it sits Maisa’s Knowledge Processing Unit (KPU), their proprietary reasoning engine for orchestrating each AI step as code rather than relying on ephemeral “chain-of-thought” text.

By separating reasoning from execution, they achieve deterministic, auditable outcomes: every action is logged in an explicit “chain-of-work,” bridging the best of LLM-style creativity with the reliability of traditional software. Unlike typical RPA or frontier-lab solutions, which remain mostly “guesswork” behind the scenes, the KPU fosters trust: teams can see precisely why and how the AI took each action, correct or refine any step, and roll out changes consistently.
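To picture what an explicit log of actions and reasons could look like, here is a toy sketch. It is not Maisa’s KPU or its actual “chain-of-work” format, which the essay does not describe in detail. The class names (`WorkStep`, `ChainOfWork`), the `add_vat` tool, and the hard-coded reason are all our own assumptions. In a real system the plan and the reason would come from a model, while the execution stays ordinary, deterministic code.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class WorkStep:
    action: str   # name of the deterministic tool that ran
    inputs: dict  # exact arguments it ran with
    reason: str   # why the step was taken (would come from the planner)
    output: Any   # what the tool returned


@dataclass
class ChainOfWork:
    steps: list = field(default_factory=list)

    def run(self, action: str, fn: Callable[..., Any], reason: str, **inputs: Any) -> Any:
        """Execute a tool as plain code and record what happened and why."""
        output = fn(**inputs)
        self.steps.append(WorkStep(action, inputs, reason, output))
        return output

    def audit(self) -> list:
        """Return a human-readable trail, one line per step."""
        return [
            f"{i}. {s.action}({s.inputs}) -> {s.output!r} because {s.reason}"
            for i, s in enumerate(self.steps, 1)
        ]


def add_vat(net: float, rate: float) -> float:
    # Hypothetical tool: a deterministic calculation, no model involved.
    return round(net * (1 + rate), 2)


chain = ChainOfWork()
total = chain.run(
    "add_vat",
    add_vat,
    reason="invoice is missing its gross amount",
    net=100.0,
    rate=0.21,
)
for line in chain.audit():
    print(line)
```

The point of the design is that an auditor never has to trust generated text about what happened. They read the recorded inputs, outputs, and stated reason for each step, and can rerun the step to check it.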

I like to joke with the founders I work with that the best B2B software products are those that help people get promoted: the ones where internal stakeholders can smell the recognition they’ll get for bringing them in. That’s the reward that AI promises today, but it also comes with risk. No one wants to bring in a system that ultimately doesn’t work.

Building this accountability tips the risk-reward ratio back into your favor. It’s a given that AI automation is a huge win for enterprises –– the key is reducing the risks (real and perceived) associated with implementation.

Maisa’s “Chain of Work” helps with that ratio. And it’s working.

The Context Layer: What Makes a Great Employee

What makes a great hire? It’s not just the credentials. It’s not just the experience. Ultimately an employee’s success in your organization will depend on their style, adaptability and, critically, also on your ability to communicate what and how you want things to be done.

Example: you hire a marketer who takes the time to understand your brand’s voice and why you say what you need to say, rather than just churning out bland marketing copy.

Example: you hire an HR person who understands that they are actually building company culture, not just creating an employee handbook.

This is the key reason GPT-4 isn’t an amazing employee. No matter what you do, GPT-4 doesn’t get you or your company. It acts according to a set of rules, but it lacks the nuance and decision-making context you’d expect from a human employee. Even if you were to articulate those rules to an AI workflow or custom GPT, you’d never get all of them.

For a few reasons:

- A lot of what we learn at a new job isn’t written down anywhere. It’s learned by observation, by intuition, and through receiving feedback and asking clarifying questions. It’s usually the ability to access and incorporate the “unwritten stuff” that distinguishes a great employee from a good one.

- The actual stuff that is written is all in unstructured data. Not in a database, but in PDFs with instructions, code, even in company emails…

- Most AI tools, at the moment, aren’t plugged into the unstructured data ecosystem of a company, let alone the minds of the current employees.

We’ve talked about how one of the advantages of Agents vs RPA is precisely this contextual understanding. It provides adaptability and eliminates the need for insanely costly “process-mapping.”

Organizing this knowledge is possible, and it’s been proven in more constrained environments. Industry-standard retrieval-augmented generation (RAG) or embeddings are a decent start, but they eventually break under large data sets or specialized knowledge, which makes this a challenge.

Maisa approaches this differently by developing a Virtual Context Window. VCW bypasses these complexities by functioning as an OS-like paging system. Digital workers “load” and “navigate” only the data they need per step, giving them effectively unlimited memory and zero collisions—no finetuning or unwieldy indexes needed. Crucially, the VCW also doubles as a long-term know-how store for each worker, meaning they adapt to new instructions or data seamlessly.
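The “OS-like paging” analogy can be sketched in a few lines. To be clear, this is not Maisa’s implementation, whose internals the essay does not describe. It is a toy context store, under our own assumptions: a fixed number of “pages” fit in the working window, and the least recently used page is evicted when a new one is needed. The class name `PagedContext`, the page ids, and the choice of LRU eviction are all ours.

```python
from collections import OrderedDict


class PagedContext:
    """Toy context store: at most `capacity` pages are 'in context' at once."""

    def __init__(self, store: dict, capacity: int = 2):
        self.store = store           # full knowledge base, keyed by page id
        self.capacity = capacity     # how many pages fit in the working window
        self.loaded = OrderedDict()  # pages currently loaded, oldest first

    def load(self, page_id: str) -> str:
        """Bring a page into the window, evicting the least recently used one."""
        if page_id in self.loaded:
            self.loaded.move_to_end(page_id)     # mark as most recently used
        else:
            if len(self.loaded) >= self.capacity:
                self.loaded.popitem(last=False)  # evict least recently used
            self.loaded[page_id] = self.store[page_id]
        return self.loaded[page_id]

    def window(self) -> list:
        """Page ids currently in the working window, oldest first."""
        return list(self.loaded)


# Hypothetical company knowledge, far larger in practice than any one window.
knowledge = {
    "refund_policy": "Refunds are accepted within 30 days.",
    "brand_voice": "Friendly, direct, no jargon.",
    "vat_rules": "Apply the VAT rate of the customer's country.",
}

ctx = PagedContext(knowledge, capacity=2)
ctx.load("refund_policy")
ctx.load("brand_voice")
ctx.load("refund_policy")  # already loaded: just refreshed
ctx.load("vat_rules")      # window is full: brand_voice is evicted
print(ctx.window())        # ['refund_policy', 'vat_rules']
```

The knowledge base can grow without bound while the working window stays small, which is the intuition behind “effectively unlimited memory” in the paging analogy.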

A critical part of the AI agent stack must be this contextual layer. Your customer will think of this as the space where they “onboard” an AI worker into their organization’s unique approach and style.

The challenge is to devise a way to encapsulate that context for your customers and translate it into your agent’s DNA, both at the moment of onboarding and in the future, so the agent can actually use that knowledge and keep learning.

Some other initiatives in this broader area we have seen:

- Unstructured data preparation for AI agents

- Continuous systems to gather and generate new context data

- Systems that allow us to finetune models more easily

- Memory systems and long context windows.

- AI with an intuitive understanding of emotional intelligence and personality which will help with all of the above. See our piece Software with a Soul.

The Coordination Layer: Managing the Agentic Workforce

In the future, businesses are probably going to manage a set of AI agent employees. You’ll have agents for customer service, sales, HR, accounting... and it’s likely that different companies will provide each of these workforces.

It’s already starting to happen. We’re seeing job listings for AI agents in the wild.

Those agents will have to “talk” to humans, and to each other. Those agents will also require permissioning and rules, with important considerations for privacy and security.
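As a rough picture of what “permissioning and rules” between agents might mean in practice, here is a minimal sketch. The agent names, topics, and the allowlist structure are all hypothetical. It assumes the simplest possible policy: a message is delivered only if the sender is explicitly allowed to raise that topic with that recipient.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    sender: str
    recipient: str
    topic: str
    body: str


# Hypothetical policy: which (sender, recipient) pairs may exchange which topics.
ALLOWED = {
    ("sales_agent", "accounting_agent"): {"invoice_status"},
    ("support_agent", "sales_agent"): {"upgrade_request"},
}


def deliver(message: Message, inbox: dict) -> bool:
    """Deliver a message only if the policy explicitly allows it."""
    allowed_topics = ALLOWED.get((message.sender, message.recipient), set())
    if message.topic not in allowed_topics:
        return False  # denied by default: nothing reaches the recipient
    inbox.setdefault(message.recipient, []).append(message)
    return True


inbox = {}
ok = deliver(
    Message("sales_agent", "accounting_agent", "invoice_status", "Is invoice 17 paid?"),
    inbox,
)
blocked = deliver(
    Message("sales_agent", "accounting_agent", "payroll", "Send salary data"),
    inbox,
)
print(ok, blocked)  # True False
```

Deny-by-default is the design choice that matters here: an agent from one vendor gets nothing from another vendor’s agent unless someone has deliberately granted it.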

This is an interesting crux moment in the development of the AI Agent space. It seems obvious that we will have swarms of agents speaking to one another. But you could imagine a world where that isn’t the case. You could see a dynamic where companies (likely incumbents) look to own the whole system of agent building and managing. In that case, they would probably look to discourage collaboration with other systems. A winner-take-all dynamic.

That said, there’s not a ton of evidence to suggest any AI products have developed that way so far. With the exception of GPUs, most of the raw materials needed to build AI products and systems (like foundational models) aren’t owned by one or two companies. We have OpenAI, Claude, Gemini, Mistral and now DeepSeek.

With the sheer number of startups we’re seeing in the agent space right now, it seems more likely that someone deep in the AI agent world will solve the communications and permissioning problem faster than an incumbent can shut them out.

Ultimately, a thriving agent ecosystem is a win-win for everyone. From the customer perspective, it provides you with an endless pool of potential AI talent, and the ability to choose the best fit for you. From a founder’s perspective, it opens the door to network effects. Each new agent that’s added to the ecosystem actually benefits you, if you are the one facilitating the connections.

In that case, inter-agent communication is essential.

Companies on the forefront of this wave already understand this and are building multi-model capabilities. (Maisa’s KPU, for example, is model agnostic.) In a world where foundational models are continuously improving, flexibility is essential. But we will also need systems for agents to safely exchange and share knowledge.

This is something to be thinking about now, as all these agent ecosystems get up and running.

The Frontier: Giving AI Agents Tools for the Job

Once we tackle accountability, context, and coordination, we get to the fun stuff.

We’re already seeing a market emerge for tools for AI Agents. Software that will make them better at their jobs. Some are calling this nascent space “B2A” (business to agent).

This will be a major unlock that takes agents from rank-and-file workers to autonomous decision makers. Imagine if humans weren’t allowed to use calculators or computers. Once you deploy an agent, you have to set it up for success.

We’re already at the beginning of this world. We’ve seen ChatGPT use a web browser and Claude move a cursor around a screen. ElevenLabs can give them a voice. But we can imagine this world getting 10x better.

Agents will need to be able to pay one another for services. They’ll need to be able to enter into contracts. Or plug into systems where humans and programs already interact.

Apps can inspire infrastructure, and vice versa. Within the AI agents space, we’re seeing this dynamic as well. These infrastructural layers will inspire “apps” (new types of agents + tools for agents) which will inform progress at the infrastructure layer.

Creating tools where agents themselves are the end user is a massive area of white space. We’re watching it closely.

What it Takes to Onboard AI Agents

Let’s be clear: we are all in on agents and excited about the potential they hold. To us, and most of the founders we work with, the world where we are all using AI agents each day is an inevitability.

Part of this excitement is building this new ecosystem from the bottom up. We have to really understand what it takes to get people to adopt a whole new computing paradigm. There is a lifecycle to these things, and we’re only at the beginning.

Creating these layers will be key to making AI agents tools that most people trust and use every day. These are the challenges that will catapult us over the adoption gap.

We’re excited for the companies that have recognized this challenge and dived right in. They’re the new infrastructure upon which the AI agent revolution will be built.

*Thank you to David Villalón, Jochen Doppelhammer, Lukas Haas, Renzo Viale and Felix Meng for notes on this essay.
